Caching: The Missing Piece in Your App Development Puzzle

When should you use caching?

“There are only two hard things in Computer Science: cache invalidation and naming things.”

The above statement by Phil Karlton has acquired some sort of legendary status in software development circles. And not without reason.

Caching has the potential to be the most important piece in your app development puzzle.

It can also turn into a nightmare if not utilized properly.

In today’s post, you’ll learn why caching is needed and when you should use it in your application.

1 - What is Caching?

A cache is a collection of items.

In the real world, you can think of a cache as a stockpile of something that is useful. Something that you need to keep handy for quick access in a time of need. For example, a cache of medicines or a stock of food items.

In the context of software engineering, the meaning isn’t so different.

Think about a bunch of bestseller products on an e-commerce website, a list of country codes or the most popular posts on your blog. All of them are things that are frequently needed by the users.

It is a particularly frustrating situation when the user requests the same data multiple times and our application goes to the database to retrieve the data every single time.

Why not store the frequently accessed data in temporary storage that is faster than database or disk access?

This temporary location is the cache.

In essence, a cache is a data-storage component that can be accessed faster than the original source of the data. Just like a real cache, the software cache is also a temporary location for information.

The ultimate goal of caching in app development is to enable faster repeated access with less effort.

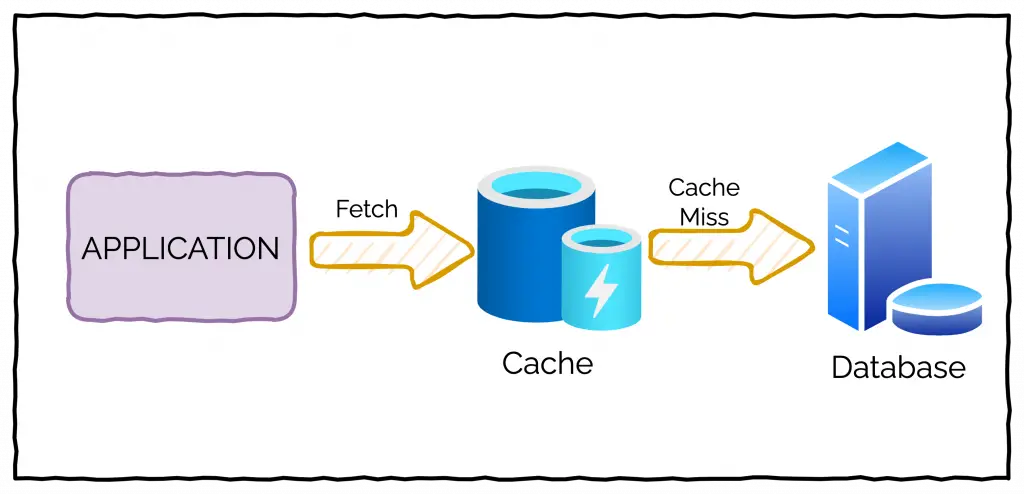

The illustration below shows the role of caching in a typical application.

The general idea is that when an application needs to fetch some data, it first checks within the cache. If it finds the information in the cache, it can access the data much faster when compared to getting it from a slower storage medium.

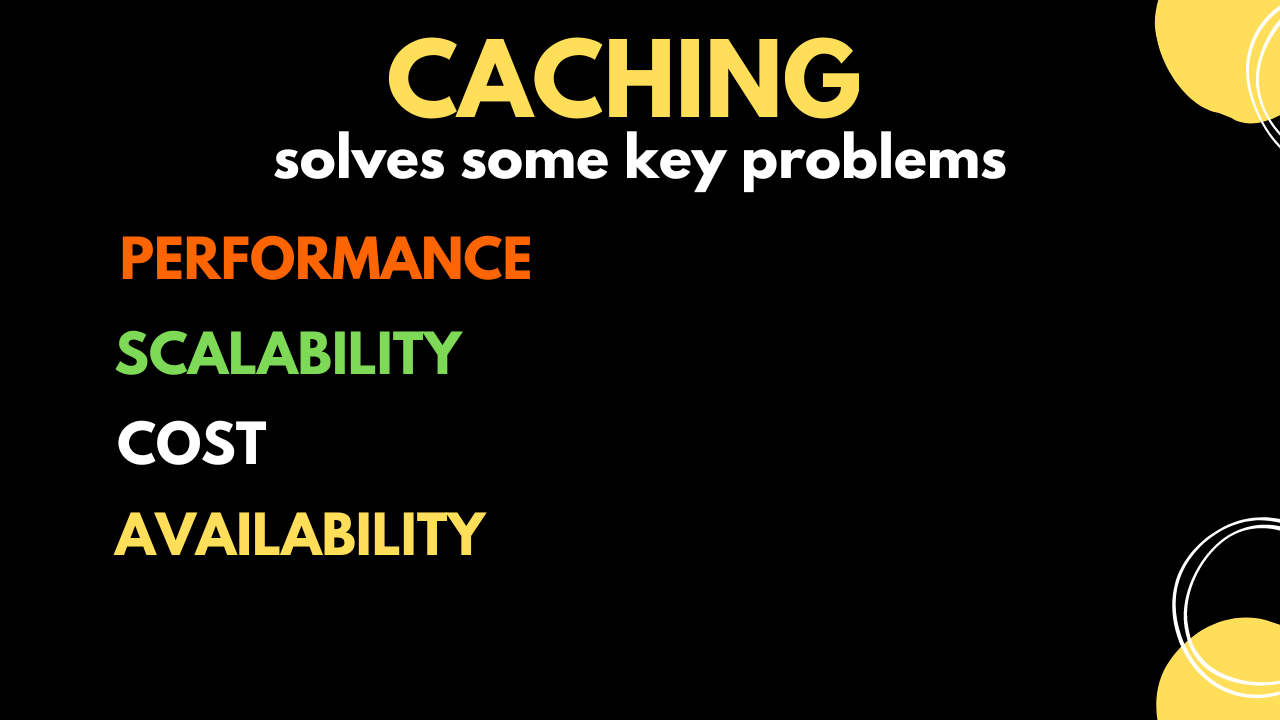
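The check-the-cache-first flow described above can be sketched in a few lines of Python. This is only an illustration: `COUNTRY_TABLE`, `read_from_source`, and `get_country` are made-up names, and a plain dictionary stands in for both the slow source and the cache.

```python
from typing import Dict

# Hypothetical stand-in for a slow data source such as a database table.
COUNTRY_TABLE: Dict[str, str] = {"IN": "India", "US": "United States"}

# Counts how often the slow source is actually read.
stats = {"source_reads": 0}

cache: Dict[str, str] = {}


def read_from_source(code: str) -> str:
    """Simulates the slow path (database or disk access)."""
    stats["source_reads"] += 1
    return COUNTRY_TABLE[code]


def get_country(code: str) -> str:
    """Check the cache first; fall back to the source on a miss."""
    if code in cache:
        return cache[code]          # cache hit: fast path
    value = read_from_source(code)  # cache miss: slow path
    cache[code] = value             # keep it handy for next time
    return value


get_country("IN")
get_country("IN")
print(stats["source_reads"])  # prints 1: the second call came from the cache
```

In a real application the cache would more likely be an in-memory store or a dedicated cache server, but the lookup order stays the same.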

2 - Problems solved by Caching

If caching is such an important component in application development, what kind of problems does it solve?

Let’s look at a few key ones:

Performance – In modern software development, latency is the new outage. Caching improves the performance of your application by reducing latency.

Scalability – As an application grows, its resources become constrained, which limits how far it can scale. Caching helps reduce the load on the servers by offloading some of it onto the browser cache or a server-side cache.

Cost – Resources cost money and increase the running cost of a system. Databases and servers are typically costly. Caching helps reduce the overall cost by moving the workload from costlier resources to cheaper ones.

Availability – It might not be obvious, but caching can also increase a system’s availability. For example, a resource needed to perform a complex calculation may not be available all the time. However, if the result has already been cached, you can return the cached result instead of denying the service to the end user.
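The availability point can be illustrated with a small fallback wrapper. The sketch below assumes a hypothetical `risk_score` service that raises `SourceUnavailable` when it is down; both names are invented for the example.

```python
from typing import Callable, Dict


class SourceUnavailable(Exception):
    """Raised by the hypothetical backing service when it is down."""


def get_with_fallback(
    key: str,
    compute: Callable[[str], float],
    cache: Dict[str, float],
) -> float:
    """Prefer a fresh result; serve the last cached one if the source fails."""
    try:
        value = compute(key)
    except SourceUnavailable:
        if key in cache:
            return cache[key]  # possibly stale, but better than an error
        raise                  # nothing cached, so the failure surfaces
    cache[key] = value
    return value


# Usage: a source that works once and then goes down.
service_up = {"value": True}


def risk_score(customer_id: str) -> float:
    if not service_up["value"]:
        raise SourceUnavailable(customer_id)
    return 0.42


results: Dict[str, float] = {}
first = get_with_fallback("customer-1", risk_score, results)
service_up["value"] = False
second = get_with_fallback("customer-1", risk_score, results)
print(first == second)  # True: the outage was masked by the cache
```

Whether a possibly outdated answer is acceptable during an outage depends on the use case, so treat this as one option rather than a default.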

3 - When to use Caching?

Though caching solves a bunch of important problems in modern app development, you should not adopt it blindly.

Any tool or technology is beneficial only when it provides some value over the current situation.

For caching to be valuable, ask yourself the questions below.

Is the operation I’m trying to cache slow?

Caching the result of an operation is beneficial only if the operation is really slow or resource-intensive.

For example, let’s say you are trying to use an external Weather API to retrieve some information. The Weather API may be slow or it may be expensive to use due to usage limits (in other words, resource-intensive).

In this case, you would do well to implement a cache to store the results of the Weather API query. When a user makes a query, you can first check if the data is available in the cache and call the slow and costly Weather API only when needed.

As a rule of thumb, first check whether the operation involves a slow external API or a database. Only then consider caching. Otherwise, you add complexity for no benefit.
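As a rough sketch of the Weather API case, the standard library's `functools.lru_cache` can memoize a slow call. Here `fetch_weather` is a hypothetical stand-in that only simulates the external service; no real API is contacted.

```python
from functools import lru_cache

# Counts real calls to the (simulated) external service.
api_calls = {"count": 0}


@lru_cache(maxsize=128)
def fetch_weather(city: str) -> str:
    """Hypothetical stand-in for a slow, rate-limited Weather API call."""
    api_calls["count"] += 1
    return f"forecast for {city}"


for city in ["Pune", "Oslo", "Pune", "Pune", "Oslo"]:
    fetch_weather(city)

print(api_calls["count"])          # 2: one real call per distinct city
print(fetch_weather.cache_info())  # hit and miss counters kept by lru_cache
```

Five requests cost only two calls against the usage limit. Note that `lru_cache` entries never expire on their own, so real weather data would also need some freshness limit.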

Is the cache actually faster?

Don’t cache just for the sake of caching!

The cache must be able to store and retrieve faster than the original source. Alternatively, it should consume fewer resources.

However, sometimes it is not immediately clear if caching will be advantageous. To make a decision, try to set up a test environment where you can simulate a high volume of traffic. Run tests with and without the cache and compare the results. If the performance improves due to caching, then and only then go for a caching-based solution.

Ultimately, there should be a measurable advantage to using a cache.
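A with-and-without comparison does not need heavy tooling to get started. The sketch below is a toy version of such a test: `slow_lookup` is a hypothetical operation whose `time.sleep` stands in for I/O latency, and the traffic pattern is invented.

```python
import time
from typing import Callable, Dict, List


def slow_lookup(key: int) -> int:
    """Hypothetical slow operation; the sleep stands in for I/O latency."""
    time.sleep(0.002)
    return key * key


def make_cached(func: Callable[[int], int]) -> Callable[[int], int]:
    """Wrap func with a plain dictionary cache."""
    store: Dict[int, int] = {}

    def wrapper(key: int) -> int:
        if key not in store:
            store[key] = func(key)
        return store[key]

    return wrapper


def measure(func: Callable[[int], int], keys: List[int]) -> float:
    """Return the wall-clock seconds needed to serve every request."""
    start = time.perf_counter()
    for key in keys:
        func(key)
    return time.perf_counter() - start


# Simulated traffic: 100 requests spread over only 5 distinct keys.
requests = [i % 5 for i in range(100)]

uncached_seconds = measure(slow_lookup, requests)
cached_seconds = measure(make_cached(slow_lookup), requests)

print(f"without cache: {uncached_seconds:.3f}s")
print(f"with cache:    {cached_seconds:.3f}s")
```

The numbers from a toy like this only show the method. The decision should rest on measurements taken against your own workload and infrastructure.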

Is the data I’m trying to cache dynamic?

Suppose your cache stores the results of a search query. When a user makes a new search query, you retrieve the results and also store them in the cache. On subsequent requests for the same query by other users, you return the cached results.

While caching such data, ask yourself how long until the cached result becomes stale.

If the cached data becomes stale very frequently and you have to invalidate it, you might not get sufficient advantage from caching. Items that don’t change from request to request are better candidates for caching.
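One common way to bound staleness is to give each entry a time-to-live (TTL). The following is a minimal sketch, not a production cache: `TTLCache` is a made-up class, and the clock is injectable so the example can run without actually waiting.

```python
import time
from typing import Any, Callable, Dict, Optional, Tuple


class TTLCache:
    """Tiny cache whose entries expire after ttl_seconds."""

    def __init__(self, ttl_seconds: float, clock: Callable[[], float] = time.monotonic):
        self._ttl = ttl_seconds
        self._clock = clock  # injectable so expiry can be tested without sleeping
        self._entries: Dict[str, Tuple[float, Any]] = {}

    def set(self, key: str, value: Any) -> None:
        self._entries[key] = (self._clock() + self._ttl, value)

    def get(self, key: str) -> Optional[Any]:
        entry = self._entries.get(key)
        if entry is None:
            return None
        expires_at, value = entry
        if self._clock() >= expires_at:
            del self._entries[key]  # stale: drop it and report a miss
            return None
        return value

    def invalidate(self, key: str) -> None:
        """Explicitly remove an entry, e.g. when the underlying data changes."""
        self._entries.pop(key, None)


# Usage with a fake clock so the example runs instantly.
now = {"t": 0.0}
search_cache = TTLCache(ttl_seconds=60, clock=lambda: now["t"])
search_cache.set("red shoes", ["result-1", "result-2"])

now["t"] = 30.0
fresh = search_cache.get("red shoes")  # still within the 60 second window

now["t"] = 61.0
stale = search_cache.get("red shoes")  # expired, so None
print(fresh, stale)
```

The shorter the TTL has to be to keep results acceptable, the fewer requests each cached entry can serve, which is the trade-off described above.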

Is the data frequently accessed?

Let’s consider our earlier example of an e-commerce platform.

If there is a popular product in your catalog, its product page may receive a ton of requests. If the details are fetched from the database every single time, your application may perform poorly due to overloading. This is an ideal scenario to explore the use of a cache.

As a rule, always ask yourself how frequently a piece of data is needed.

The more often it is needed, the more effective a cache will be.

To answer this question, you need to have a really good understanding of the statistical distribution of data access from your data source.

Caching is more likely to be effective when access is concentrated on a relatively small set of items (a peaked distribution, such as a bell curve) than when it is spread evenly across all items (a flat distribution).
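A rough simulation makes the effect of the access distribution visible. The sketch below replays two invented request streams through a small least-recently-used (LRU) cache: one where every item is equally likely, and one where a few items dominate. The catalog size, cache capacity, and the 1/rank weighting are all assumptions chosen for illustration.

```python
import random
from collections import OrderedDict
from typing import Sequence


def lru_hit_rate(requests: Sequence[int], capacity: int) -> float:
    """Replay requests through an LRU cache and return the fraction of hits."""
    cache: "OrderedDict[int, None]" = OrderedDict()
    hits = 0
    for key in requests:
        if key in cache:
            hits += 1
            cache.move_to_end(key)         # mark as most recently used
        else:
            cache[key] = None
            if len(cache) > capacity:
                cache.popitem(last=False)  # evict the least recently used
    return hits / len(requests)


rng = random.Random(7)     # fixed seed so runs are repeatable
items = list(range(1000))  # hypothetical catalog of 1,000 products

# Flat access: every product is equally likely to be requested.
flat = rng.choices(items, k=20_000)

# Concentrated access: the product at rank r is requested with weight 1/r.
weights = [1 / (rank + 1) for rank in items]
skewed = rng.choices(items, weights=weights, k=20_000)

flat_rate = lru_hit_rate(flat, capacity=50)
skewed_rate = lru_hit_rate(skewed, capacity=50)
print(f"flat:   {flat_rate:.1%}")
print(f"skewed: {skewed_rate:.1%}")
```

With the same cache size, the concentrated stream should see a far higher hit rate than the flat one. Replaying real access logs through a function like this is one way to estimate whether a cache would pay off before building it.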

Does the original operation have side effects?

If you want to cache the result of an operation, it must not have side effects. For example, the operation should not store data, make changes to other systems or control some software or hardware item.

If the result of such operations is cached, you will end up breaking your application when the requests are served from the cache and the side effects are ignored.
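The problem is easy to reproduce. In the sketch below, `view_product_unsafe` is a hypothetical function that both returns product details and bumps a view counter; once it is cached, the counter silently stops updating. A possible fix is to cache only the pure part and keep the side effect outside.

```python
from functools import lru_cache
from typing import Dict

unsafe_views: Dict[str, int] = {}  # stands in for a write to another system
safe_views: Dict[str, int] = {}


@lru_cache(maxsize=None)
def view_product_unsafe(product_id: str) -> str:
    """Anti-pattern: a cached function that also performs a side effect."""
    unsafe_views[product_id] = unsafe_views.get(product_id, 0) + 1
    return f"details for {product_id}"


@lru_cache(maxsize=None)
def load_details(product_id: str) -> str:
    """Pure lookup: safe to cache because it only returns a value."""
    return f"details for {product_id}"


def view_product(product_id: str) -> str:
    """The side effect runs on every call; only the lookup is cached."""
    safe_views[product_id] = safe_views.get(product_id, 0) + 1
    return load_details(product_id)


for _ in range(3):
    view_product_unsafe("sku-1")
    view_product("sku-1")

print(unsafe_views["sku-1"])  # 1: two of the three views were never counted
print(safe_views["sku-1"])    # 3: every view was counted
```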

That’s it

The decision to use a cache is not an emotional matter.

Though caching has a very important role in application development, you need to examine your use case through the lens of these hard questions.

If you find satisfactory answers that point to the use of a cache, you will be able to reap the benefits in the long run. Otherwise, caching systems can become more of a liability.

In the coming weeks, I will cover caching in much more detail, with practical examples.

So stay tuned!
