How to design a high-availability system on the cloud?

Cloud & Backend EP 5 - Two reusable patterns you can leverage

Hello 👋 and welcome to a new edition of the Progressive Coder newsletter.

The topic for today - How to design a high-availability system on the cloud?

To begin with, let’s understand the meaning of high-availability.

You call a system highly available when it can remain operational and accessible even when there are hardware and software failures. The idea is to ensure continuous service.

So, how can you design such a system? 🤔

The most reliable approach is to leverage static stability. 🚀

But before we get to the meaning of this term, it’s important to understand the concept of Availability Zones.

❓What are Availability Zones?

You must have heard about Availability Zones in AWS or other cloud platforms.

If not, here’s a quick definition of the term from the context of AWS:

Availability Zones are isolated sections of an AWS region. They are physically separated from each other by a meaningful distance so that a single event cannot impair them all at once.

For perspective, this single event could be a lightning strike, tornado or even an earthquake.

This definition created a tiny buzz on Twitter last week. I think the Godzilla attack had something to do with it.

To achieve this separation, Availability Zones don’t share power or other infrastructure. However, they are connected with fast & encrypted fibre-optic networking so that application failover can be smooth.

Here’s a picture showing the AWS Global Infrastructure from a few years ago.

The orange circles denote a region and the number within those circles is the number of availability zones within that region.

🤖 What’s Static Stability?

Availability Zones let you build highly available systems, and you can go about it in two ways:

reactive

proactive

👉 In a reactive approach, you let the service scale up in another availability zone after there is some sort of disruption in one of the zones.

You might use something like an AWS Auto Scaling group to manage the scale-up automatically. But the idea is that you react to impairments when they happen rather than preparing for them in advance.

👉 In a proactive approach, you over-provision the infrastructure in a way that your system continues to operate satisfactorily even in the case of disruption within a particular Availability Zone.

The proactive approach ensures that your service is statically stable.
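To make the difference concrete, here is a minimal Python sketch that compares the two approaches at the moment an AZ is lost. The numbers (a peak load of 12 instances spread over 3 AZs) and the function names are made up for illustration and are not part of any AWS API.

```python
def capacity_after_az_loss(instances_per_az: int, az_count: int) -> int:
    """Instances still running immediately after one AZ is lost."""
    return instances_per_az * (az_count - 1)


def shortfall(peak_instances: int, instances_per_az: int, az_count: int) -> int:
    """How many instances are missing before any scale-up has happened."""
    remaining = capacity_after_az_loss(instances_per_az, az_count)
    return max(0, peak_instances - remaining)


# Hypothetical workload: 12 instances are needed at peak, spread over 3 AZs.
PEAK_INSTANCES = 12
AZ_COUNT = 3

plans = {
    "reactive": 4,   # 12 / 3: just enough while every AZ is healthy
    "proactive": 6,  # 12 / 2: enough even when one AZ is gone
}

for name, per_az in plans.items():
    missing = shortfall(PEAK_INSTANCES, per_az, AZ_COUNT)
    print(f"{name}: {missing} instances short right after losing an AZ")
```

With these numbers, the reactive plan is 4 instances short until the scale-up completes, while the proactive plan is short by 0 and never needs to launch anything during the outage.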

A lot of AWS services use static stability as a guiding principle. Some of the most popular ones are:

Amazon EC2

Amazon RDS

Amazon S3

Amazon DynamoDB

Amazon ElastiCache

If your system is statically stable, it keeps working even when a dependency becomes impaired.

For example, the Amazon EC2 service supports static stability by targeting high-availability for its data plane (the part that keeps existing EC2 instances running, as opposed to the control plane that launches new ones). This means that once launched, an EC2 instance has local access to all the information it needs to route packets.

However, leveraging static stability is not just for the cloud provider. You can also use static stability while designing your own applications for the cloud.

Let’s look at a couple of patterns that use the concept of static stability:

🚀 Pattern#1 - Active-Active High-Availability using AZs

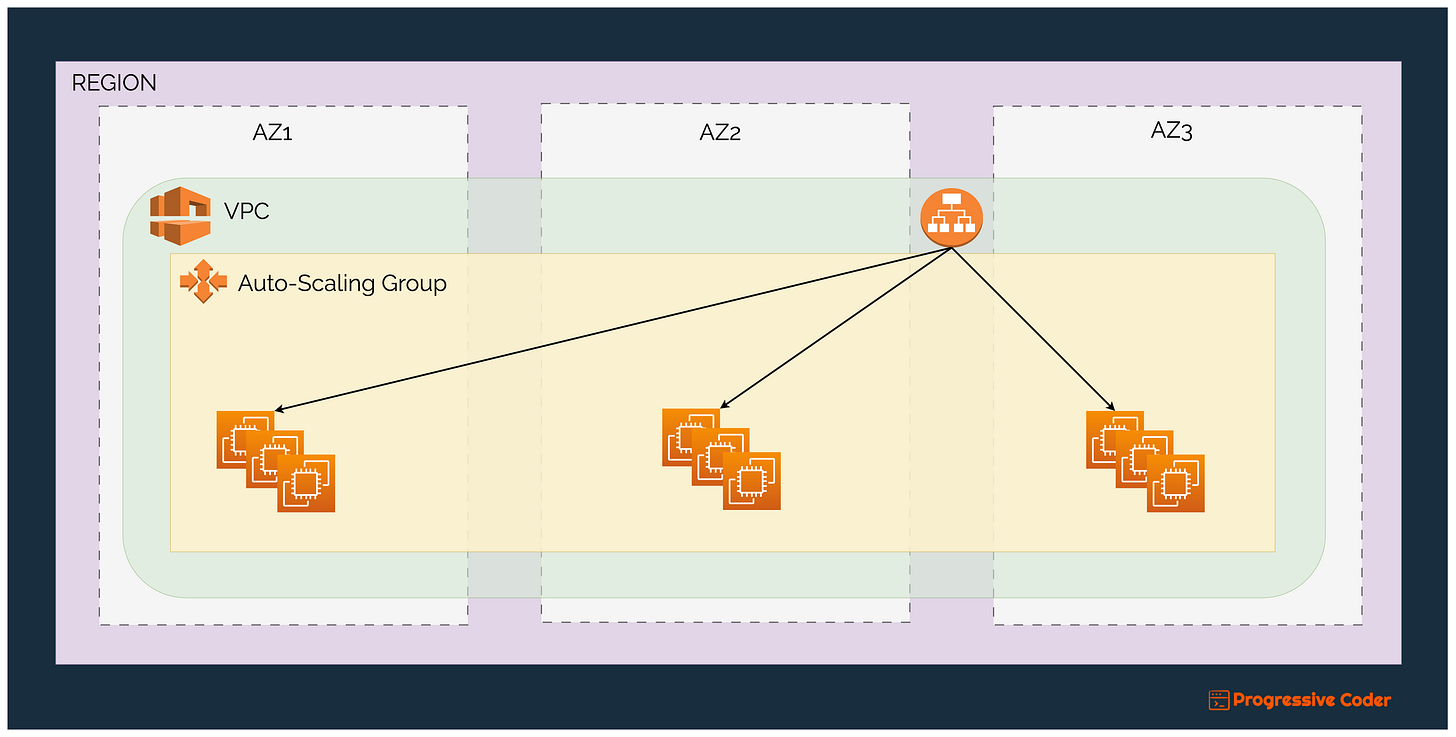

Here’s an example of how you can implement a load-balanced HTTP service.

You have a public-facing load balancer targeting an Auto Scaling group that spans three Availability Zones in a particular Region. Also, you make sure to over-provision capacity by 50%, so that the two remaining AZs can carry the full load on their own if the third one is lost.
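Where does the 50% come from? If the surviving AZs must carry the whole peak load, each AZ needs peak / (number of AZs - 1) instances. Here is a small Python sketch of that arithmetic. The function name and the peak of 12 instances are assumptions for illustration, and the sketch only plans for losing a single AZ.

```python
import math


def size_for_az_loss(peak_instances: int, az_count: int) -> tuple[int, float]:
    """Return (instances per AZ, over-provisioning ratio) so that the
    remaining AZs can serve peak load after any single AZ is lost."""
    if az_count < 2:
        raise ValueError("need at least two AZs to survive the loss of one")
    surviving_azs = az_count - 1
    per_az = math.ceil(peak_instances / surviving_azs)
    overprovision = (per_az * az_count) / peak_instances - 1
    return per_az, overprovision


# Hypothetical peak load of 12 instances.
for azs in (2, 3, 4):
    per_az, extra = size_for_az_loss(12, azs)
    print(f"{azs} AZs: {per_az} instances per AZ, {extra:.0%} over-provisioned")
```

For three AZs this gives 6 instances per AZ, or 18 in total against a peak need of 12, which is the 50% figure. Spreading across more AZs lowers the extra capacity you pay for: two AZs need 100% extra, four AZs need about 33%.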

If an AZ goes down for whatever reason, you don’t need to do much to support the system.

The EC2 instances within the problematic AZ will start failing health checks and the load balancer will shift traffic away from them.

Since the setup is statically stable, it will continue to remain operational without hiccups.
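The traffic shift can be pictured with a toy model. The Python sketch below is not how an AWS load balancer is implemented. It is a simplified round-robin router over hypothetical targets that skips anything marked unhealthy, which is the behaviour the pattern relies on.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass
class Target:
    name: str
    az: str
    healthy: bool = True


class ToyLoadBalancer:
    """Round-robin over healthy targets only (illustrative, not an AWS API)."""

    def __init__(self, targets):
        self.targets = list(targets)
        self._next = 0

    def mark_az_unhealthy(self, az: str) -> None:
        # Stands in for instances in the AZ failing their health checks.
        for target in self.targets:
            if target.az == az:
                target.healthy = False

    def route(self) -> Target:
        healthy = [t for t in self.targets if t.healthy]
        if not healthy:
            raise RuntimeError("no healthy targets left")
        target = healthy[self._next % len(healthy)]
        self._next += 1
        return target


# Two hypothetical instances in each of three AZs.
targets = [Target(f"i-{az}-{n}", az) for az in ("az-a", "az-b", "az-c") for n in (1, 2)]
lb = ToyLoadBalancer(targets)

lb.mark_az_unhealthy("az-a")
served_by_az = Counter(lb.route().az for _ in range(100))
print(served_by_az)  # only az-b and az-c receive requests
```

Notice that nothing new is launched in this model. The healthy instances were already running, so the only change during the outage is where requests get sent.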

🚀 Pattern#2 - Active-Standby on Availability Zones

The previous pattern dealt with stateless services.

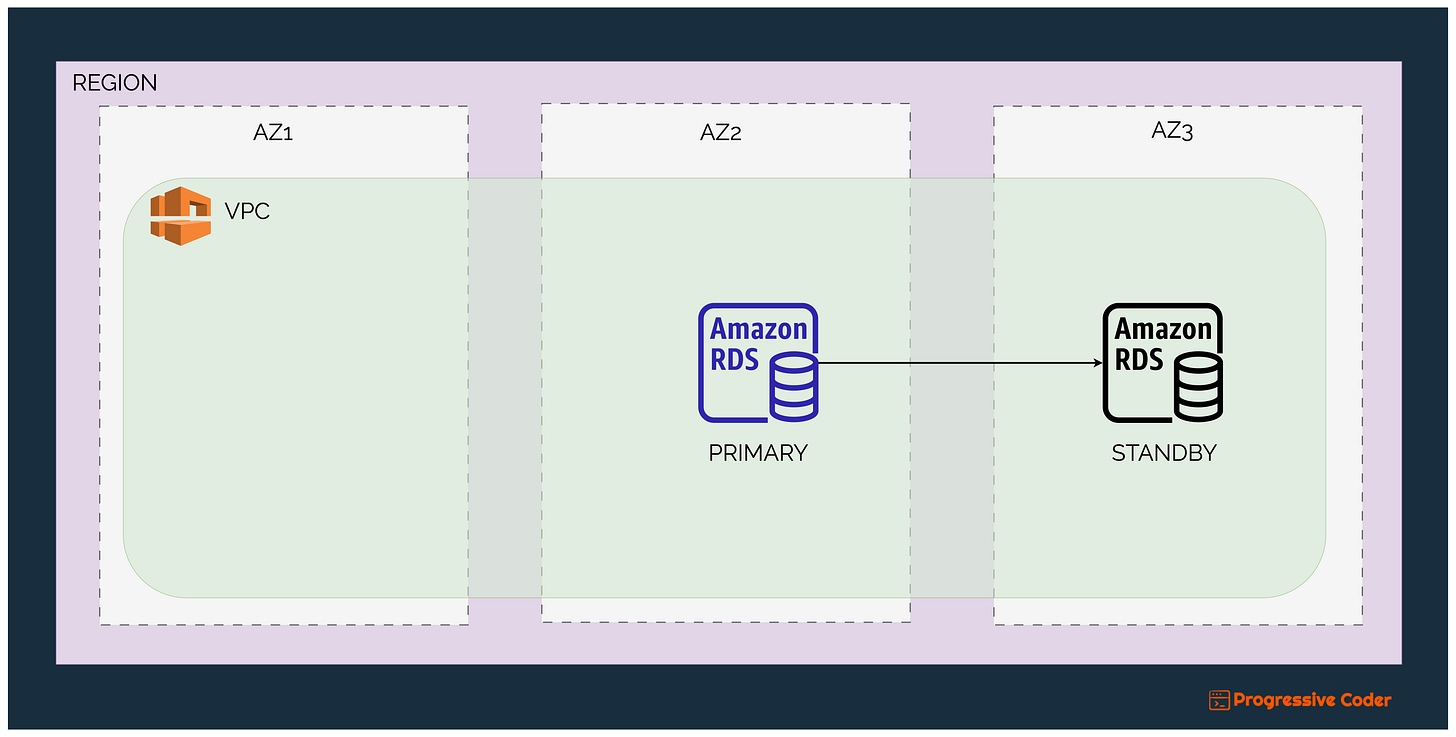

However, you might also need to implement high-availability for a stateful service. A prime example is a database system such as Amazon RDS.

A typical high-availability setup for this requirement needs a primary instance which takes all the writes and a standby instance. The standby instance will be kept in a different Availability Zone.

Here’s what it looks like:

When the primary AZ goes down for whatever reason, RDS manages the failover by promoting the standby instance to be the new primary.

Again, the service is statically stable.
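Here is a minimal Python sketch of the active-standby idea. It is a toy in-memory model, not the RDS implementation: the class names are hypothetical, and it assumes every write is copied to the standby before it is acknowledged, so the standby already holds the data when it takes over.

```python
class DbNode:
    """A toy database node living in one Availability Zone."""

    def __init__(self, name: str, az: str):
        self.name = name
        self.az = az
        self.data = {}
        self.up = True


class ActiveStandbyDb:
    """Clients talk to this object; it always forwards to the current primary."""

    def __init__(self, primary: DbNode, standby: DbNode):
        self.primary = primary
        self.standby = standby

    def write(self, key, value) -> None:
        if not self.primary.up:
            raise RuntimeError("primary is down, fail over first")
        self.primary.data[key] = value
        self.standby.data[key] = value  # assumed synchronous copy

    def read(self, key):
        if not self.primary.up:
            raise RuntimeError("primary is down, fail over first")
        return self.primary.data[key]

    def fail_over(self) -> None:
        # The standby was provisioned in advance, so promotion is just a role swap.
        self.primary, self.standby = self.standby, self.primary


db = ActiveStandbyDb(DbNode("db-1", "az-a"), DbNode("db-2", "az-b"))
db.write("order-42", "paid")

db.primary.up = False  # simulate losing the primary's AZ
db.fail_over()
print(db.primary.az, db.read("order-42"))
```

The point of the sketch is that the failover step does not create anything. The standby exists and has the data before the outage starts, which is what makes the setup statically stable.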

🥡 So, what’s the takeaway?

In both patterns, you already provisioned the capacity needed in case of an Availability Zone going down.

In either case, you are not trying to create new instances on the fly since you have already over-provisioned the infrastructure across AZs.

This means your systems are statically stable and can survive the loss of an Availability Zone without scrambling for new capacity. In other words, your system is highly available.

👉 Over to you

Does high-availability matter to you?

If yes, how do you handle it within your applications?

Write your replies in the comments section.

The inspiration for this post came from this wonderful paper released as part of the Amazon Builders’ Library. You can check it out in case you are interested in going deeper into the theoretical foundations of static stability.

If you found today’s post useful, consider sharing it with friends and colleagues.

Wishing you a great week ahead! ☀️

See you later.