Instance #1: Kafka Replication, Why Kubernetes Pods, and More

Also, should Backend engineers learn DevOps?

Creating new instance…

Fetching dependencies…

Instance created successfully!

Hello, this is the ProgressiveCoder Publication and today is the beginning of something really cool.

The Instance Newsletter.

Twice a week (most likely on Tuesday and Thursday), you’ll get a new instance of this newsletter packed with valuable content on software development and computer science.

In today’s edition, we talk about:

How does replication work in Kafka?

The magic of Kubernetes Pods

Can Backend and DevOps roles become one in the future?

And, a little bit more…

Kafka Replication in a Nutshell

Kafka brokers have topics. Topics have partitions. We write data to partitions.

If a broker goes down, the partitions it hosts go down with it, and we can end up losing data.

To avoid data loss, we need replication.

In Kafka, this means keeping copies of each partition on multiple brokers.

The diagram below makes this clearer.

When a particular partition is replicated, it is assigned to one or more additional brokers.

As an example, partition 0 of Topic A is assigned to both Broker 1 and Broker 2. The same goes for partition 1 of Topic A.
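To make the placement idea concrete, here is a minimal Python sketch of spreading replicas across brokers. The function `assign_replicas` and its simple round-robin strategy are illustrative assumptions, not Kafka’s actual code; Kafka’s own assignment is more involved (for example, it randomizes the starting broker and can take rack placement into account). The first broker in each list plays the role of the partition’s preferred leader.

```python
from typing import Dict, List


def assign_replicas(num_partitions: int, brokers: List[int],
                    replication_factor: int) -> Dict[int, List[int]]:
    """Simplified round-robin placement of each partition's replicas on distinct brokers.

    The first broker in each returned list acts as the preferred leader.
    """
    if replication_factor > len(brokers):
        raise ValueError("replication factor cannot exceed the number of brokers")
    assignment: Dict[int, List[int]] = {}
    for partition in range(num_partitions):
        # Start at a different broker for each partition so leadership is spread out,
        # then take the following brokers (wrapping around) for the follower copies.
        start = partition % len(brokers)
        assignment[partition] = [
            brokers[(start + i) % len(brokers)] for i in range(replication_factor)
        ]
    return assignment


# Two partitions of Topic A, two brokers, two copies of each partition.
print(assign_replicas(num_partitions=2, brokers=[1, 2], replication_factor=2))
# {0: [1, 2], 1: [2, 1]}
```

With two brokers, both partitions end up on both brokers, as in the example above, but each partition gets a different leader, which spreads the write load.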

But there’s something different about the copies of each partition.

When a partition is replicated across multiple brokers, one of them acts as the leader for that partition, and the others are followers.

So why does it matter?

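Producers can publish messages only to the leader. By default, consumers also read from the leader, while the followers simply copy the leader’s data to stay up to date. (Since Kafka 2.4, consumers can optionally be configured to fetch from a nearby follower instead.)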
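The leader/follower split can be modeled as a toy in Python. Everything here (`PartitionReplicas`, `NotLeaderError`, the in-memory logs) is a hypothetical simplification: real followers pull batches from the leader with fetch requests, and the leader tracks which followers are caught up. The sketch only shows the direction of data flow: writes land on the leader, and followers copy from it.

```python
from dataclasses import dataclass, field
from typing import Dict, List


class NotLeaderError(Exception):
    """Raised when a write is sent to a replica that is not the leader (hypothetical)."""


@dataclass
class PartitionReplicas:
    """Toy model of one replicated partition: every broker keeps its own copy of the log."""

    leader: int
    followers: List[int]
    logs: Dict[int, List[str]] = field(default_factory=dict)

    def __post_init__(self) -> None:
        # Give every replica (leader first, then followers) an empty log.
        for broker in [self.leader, *self.followers]:
            self.logs.setdefault(broker, [])

    def produce(self, broker: int, message: str) -> None:
        # Only the leader accepts writes.
        if broker != self.leader:
            raise NotLeaderError(f"broker {broker} is not the leader for this partition")
        self.logs[self.leader].append(message)

    def replicate(self) -> None:
        # Each follower copies whatever it is missing from the end of the leader's log.
        leader_log = self.logs[self.leader]
        for follower in self.followers:
            follower_log = self.logs[follower]
            follower_log.extend(leader_log[len(follower_log):])


partition = PartitionReplicas(leader=1, followers=[2])
partition.produce(1, "order-created")
partition.replicate()
print(partition.logs)  # {1: ['order-created'], 2: ['order-created']}
```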

Leader brokers can also go down for some reason.

If the leader goes down, a new leader is elected automatically. One of the followers, normally one that is fully caught up with the old leader (an in-sync replica), emerges victorious and takes over as leader.
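The election rule can be sketched as a small Python function. It assumes the partition’s replicas are listed in assignment order and that we know which replicas are in sync (fully caught up with the old leader) and which brokers are alive; the name `elect_leader` and its parameters are illustrative. By default, Kafka’s controller makes a comparable choice, picking the first live in-sync replica. The `allow_unclean` flag loosely mirrors the `unclean.leader.election.enable` setting, which trades possible data loss for availability.

```python
from typing import List, Optional, Set


def elect_leader(replicas: List[int], in_sync: Set[int], alive: Set[int],
                 allow_unclean: bool = False) -> Optional[int]:
    """Pick a new leader for a partition whose leader has failed.

    replicas: the partition's assigned replicas, in assignment order.
    in_sync:  replicas that were fully caught up with the old leader.
    alive:    brokers that are currently reachable.
    """
    # Prefer the first live replica that is also in sync, so committed data is kept.
    for broker in replicas:
        if broker in alive and broker in in_sync:
            return broker
    # Optionally fall back to an out-of-sync replica, accepting possible data loss.
    if allow_unclean:
        for broker in replicas:
            if broker in alive:
                return broker
    # No eligible replica: the partition stays offline until one comes back.
    return None


# Broker 1 (the old leader) is down; broker 2 is caught up, broker 3 lagged behind.
print(elect_leader(replicas=[1, 2, 3], in_sync={1, 2}, alive={2, 3}))  # 2
```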

Replication directly impacts the overall reliability of your messages. But more on that in the next edition.

The magic of Kubernetes Pods

Containers have taken over the world. From shipping lanes to software deployments, containers are everywhere.

But containers in software deployments also create a problem.

The problem stems from the need to have a large number of containers for building any useful application.

Unless your application is small and individually managed, you’d most likely be splitting the various parts of your application into multiple containers.

However, multiple containers can often feel like overkill.

If your app happens to consist of multiple processes communicating through IPC (Inter-Process Communication) or using local file storage, you might be tempted to run multiple processes in a single container. After all, each container is like an isolated machine.

But we need to avoid this temptation at all costs.

Containers are designed to run a single process each (not counting any child processes that process spawns).

If we run multiple unrelated processes in a single container, we become responsible for keeping all of them running. Maintenance also gets harder: we have to restart crashed processes ourselves, and their logs end up interleaved in the same output stream.

Running each process in its own container is the best way to leverage the true potential of Docker containers.

But what about requirements where two or more applications or processes need to share resources such as the filesystem?

This is where Kubernetes Pods come into the picture.

A Pod represents a collection of application containers and volumes running in the same execution environment.

The same execution environment is the key part here.

It means we can use pods to run closely related processes that need to share some resources. At the same time, we can keep these processes isolated from each other. Best of both worlds!

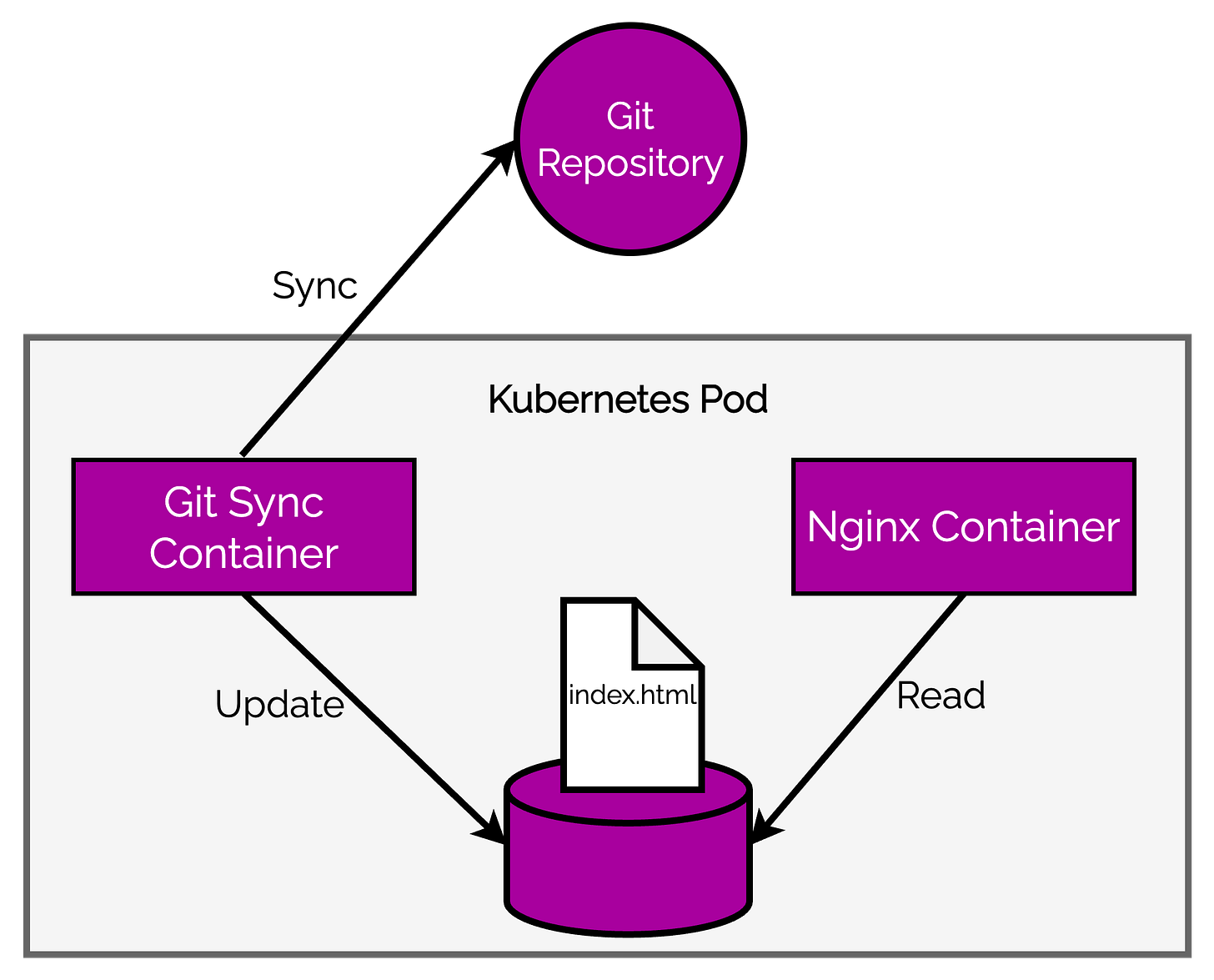

Look at this example application.

Here, our pod runs two applications in separate containers: one fetches the latest HTML files from a Git repository, and the other serves those files using an nginx server instance. Both containers share a common volume, so they see the same files.
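A Pod like this can be described with a manifest. The sketch below builds one as a Python dictionary and prints it as JSON, which `kubectl apply -f` accepts just like YAML. The Pod and container names, the `alpine/git` image, the 60-second pull interval, and the repository URL are placeholders chosen for illustration; any image with `git` and a shell would do for the fetcher. The key detail is the single `emptyDir` volume mounted into both containers.

```python
import json


def build_static_site_pod(repo_url: str) -> dict:
    """Return a Pod manifest (as a dict) for the Git-fetcher + nginx example.

    Both containers mount the same emptyDir volume named "html", so files the
    fetcher clones are visible to the web server. Names and images are placeholders.
    """
    # Clone on first start (skip if an earlier run already cloned), then pull every 60 s.
    # Caveat: nginx would also serve the .git directory; keep it out of the web root
    # in a real deployment.
    fetch_script = (
        '[ -d /repo/.git ] || git clone "$REPO_URL" /repo; '
        "while true; do git -C /repo pull; sleep 60; done"
    )
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": "static-site"},  # placeholder name
        "spec": {
            # Scratch volume that lives as long as the Pod and is shared by both containers.
            "volumes": [{"name": "html", "emptyDir": {}}],
            "containers": [
                {
                    "name": "git-fetcher",
                    "image": "alpine/git",  # assumption: any image with git and sh works
                    "command": ["sh", "-c", fetch_script],
                    "env": [{"name": "REPO_URL", "value": repo_url}],
                    "volumeMounts": [{"name": "html", "mountPath": "/repo"}],
                },
                {
                    "name": "web-server",
                    "image": "nginx",
                    "ports": [{"containerPort": 80}],
                    # nginx's default document root; read-only since it only serves files.
                    "volumeMounts": [
                        {"name": "html", "mountPath": "/usr/share/nginx/html", "readOnly": True}
                    ],
                },
            ],
        },
    }


if __name__ == "__main__":
    manifest = build_static_site_pod("https://example.com/your/site.git")  # placeholder URL
    print(json.dumps(manifest, indent=2))
```

Saving the output as `pod.json` and running `kubectl apply -f pod.json` would create the Pod. Both containers then see the same files, yet each keeps its own image, process, and restart behavior.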

We built something like this in our earlier post on the sidecar pattern, so if you are interested in diving deeper, do check it out.

Can Backend and DevOps roles become one in the future?

Most companies today are struggling with deployment challenges.

Features are still being built at a brisk pace. But the real challenges start when those features have to be deployed.

Even simple changes take a long time to finally reach production. There are many intermediate steps and sometimes, different departments are involved. In some slow-moving setups, this time can be so long that developers who built a feature may have already left the organization by the time their feature reaches production.

However, even if every team has a dedicated DevOps person for deploying infrastructure and new code, bottlenecks can still form when several features are waiting to be shipped.

Moreover, when the features finally reach production, any issue that arises triggers the whole cycle once again. Often, developers cannot understand why their beautifully written code failed in production when it worked perfectly fine on their machine. Must be the fault of DevOps!

What if the Backend and DevOps roles merged?

If a backend developer needs a change to a database table, they usually hand it off to an ops team or the DevOps person. They also end up waiting when a new feature has to be deployed immediately but the DevOps team member is busy with another task.

Things can move much faster if the backend engineer knows how to handle the operations side as well. I specifically say backend engineer because I feel they are better placed to pick up the ops part (with some training, of course) than a typical frontend engineer.

Of course, there is a trade-off in this approach.

If you expect your developers to know more, you are moving toward a more generalized setup. This setup can backfire if you approach it from a know-it-all point of view.

On the other hand, smaller teams can benefit a lot from this jack-of-all-trades approach. In my opinion, it works best when the team adopts a learn-it-all culture rather than a know-it-all one.

What do you think about this debate? What have your experiences been working with Ops teams, or with developers if you are on the Ops side? Do share your thoughts in the comments section below.

The Merits of Deep Work

Many of us struggle to balance out various tasks and distractions during the day.

Even when we think we are making progress, it often turns out to be toward some superficial goal, such as attending a useless meeting. Moreover, cubicles have given way to concentration-sapping open floor plans. Even home offices can be hotspots for distractions if work is not properly balanced with family commitments.

Deep Work can help us achieve the right balance between real work and shallow work.

I first came across this term in the wonderful book Deep Work by Cal Newport.

Basically, Deep Work means professional activities performed in a state of distraction-free concentration that push your cognitive capabilities to their limit. These efforts create new value, improve your skills, and are hard to replicate.

Of course, knowing what Deep Work is does not teach us how to do it.

No reason to worry, though.

In the next edition, I will talk about some strategies for doing Deep Work.

So stay tuned until the next time!